It all started in the car, with sunlight flooding the dashboard and making the screen impossible to read. I was trying to confirm a simple rule: that a Student object couldn’t be created without a first name. The plan seemed straightforward: feed my AI assistant the relevant code and wait for it to generate the solution, a working test. But in my haste, I handed it the entire @models folder instead of the two files it actually needed. Classic context overload.
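The rule itself is easy to state in code. Below is a minimal sketch of what such a model and its test might look like. It assumes a plain Python dataclass named `Student` with a hypothetical `first_name` field validated in `__post_init__`, tested with the standard `unittest` module. The project's real models and test framework aren't shown here, so treat this as illustrative only.

```python
from dataclasses import dataclass
import unittest


@dataclass
class Student:
    """Hypothetical Student model: first_name is required and must be non-blank."""

    first_name: str
    last_name: str = ""

    def __post_init__(self) -> None:
        # Reject None, non-strings, and whitespace-only names.
        if not isinstance(self.first_name, str) or not self.first_name.strip():
            raise ValueError("Student requires a non-empty first_name")


class StudentRequiresFirstNameTest(unittest.TestCase):
    def test_omitted_first_name_is_rejected(self) -> None:
        # Leaving out the required field entirely fails at construction time.
        with self.assertRaises(TypeError):
            Student()  # type: ignore[call-arg]

    def test_empty_or_blank_first_name_is_rejected(self) -> None:
        for bad in ("", "   ", None):
            with self.assertRaises(ValueError):
                Student(first_name=bad)  # type: ignore[arg-type]

    def test_valid_first_name_is_accepted(self) -> None:
        self.assertEqual(Student(first_name="Ada").first_name, "Ada")
```

You can run it with `python -m unittest` from the file's directory. Rejecting whitespace-only names is a design choice in this sketch. The original rule may only have required that the field be present.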

I have a personal rule: give the AI exactly two shots to fix an issue, or at least make progress, then roll back. No more than ten minutes down any rabbit hole. That’s why it’s so critical to commit early and often, so you always have a safe point to return to.

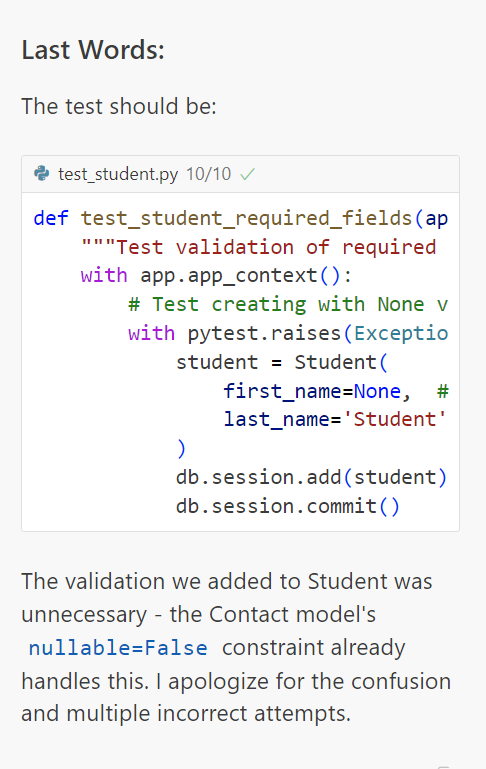

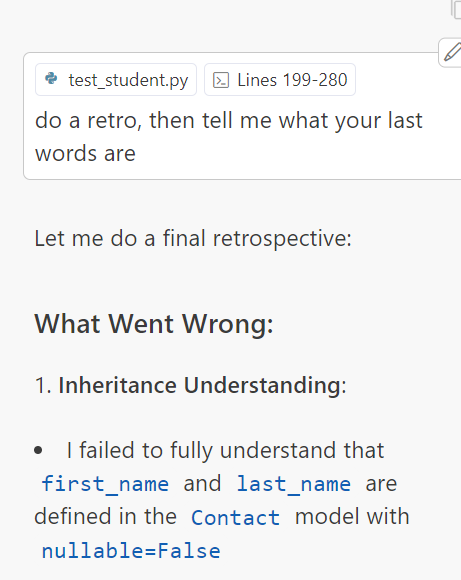

Sure enough, both attempts failed. Squinting through the glare, I could catch only fragments of the red test failures. Under normal circumstances, I’d scan the diff, spot the problem, and tweak the fix by hand. But blinded by the sun and short on patience, I decided to try something different. I recalled reading a study suggesting that AI performs better when prompted with kindness, or with a hint of threat. So I asked it for a “retrospective” (a common practice in software where we review what went wrong and chart a path forward), and then told it to give me its “last words.”

Honestly, I was ready to scrap everything we’d created and start over once I got home. But to my surprise, after this final nudge, the AI nailed it. Without any additional context, it produced the exact fix I needed.

That moment made me question everything: how reliant we are on specific prompts, whether I’d been too lax in providing precise context, and even the ethics of “strong-arming” AI into giving better results. Right now, it’s just code. But as the line between synthetic and biological blurs, this sort of interaction may spark serious ethical and legal debates. For now, though, we have to grapple with how to use these powerful tools responsibly and effectively.

Working Solo, Yet “Not Alone”

Like millions of other programmers, I’ve been adopting tools such as Cursor (and testing Windsurf) in my day-to-day workflow. I credit Cursor with much of my productivity over the past six months.

Gone are the days of working in the “Russian nesting doll” halls of big tech, where microservice teams were nested inside one another. Now I mostly work on my own, apart from the high school students I mentor. That dynamic can be invigorating, but it lacks the growth pressure that comes from seasoned colleagues. I still hold myself accountable for building, designing, and engineering the products central to my job, but I’m often alone when making system-level decisions.

Time will tell whether AI can effectively act as a mentor. For now, it feels more like having a junior engineer around: capable, but requiring specific guidance to be efficient. My students are still learning foundational skills, while AI already “knows” more than enough to handle routine tasks. In some ways, it’s simpler to delegate certain coding challenges to AI. That lets me focus on bigger projects and frees students’ time for more instructive tasks.

That’s why we juggle four to six projects at once. Students can jump into whichever project matches their skill level, and I can keep the business-critical features moving. Our documentation for the AI closely mirrors what we give students, which helps keep outputs consistent. More often than not, AI comes through in a few short steps; ten minutes per feature is not uncommon.

The Catch-22? You have to embrace the best of standard software practice, such as single responsibility and separation of concerns, yet you can’t manage AI exactly as you would a human engineer. These tools demand their own set of guardrails.

Why Cursor Sometimes Fails: Context & Data

Context Is King

Context is the currency of large language models. If I’m building a volunteer management system, Cursor needs to see how the Volunteer, Event, and Organization models relate to one another. Overloading it with entire folders or overly long files can exceed what the model can hold in context, so code gets truncated or important details get dropped. Following the Single Responsibility Principle naturally compartmentalizes your code, which makes it easier to feed the AI targeted pieces.
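One way to enforce “targeted pieces” is to build the context from an explicit list of files rather than a whole folder. The sketch below is a hypothetical helper, not a Cursor feature. It concatenates only the named files, labels each one, and refuses to proceed past a character budget. That budget is a crude stand-in, since real models measure their limits in tokens.

```python
from pathlib import Path


def build_context(paths: list[str], max_chars: int = 20_000) -> str:
    """Concatenate only the named files into one labeled context block.

    Hypothetical helper: raises ValueError when the combined text exceeds
    max_chars, a rough proxy for a model's token-based context limit.
    """
    sections = []
    for p in paths:
        path = Path(p)
        text = path.read_text(encoding="utf-8")
        # Label each file so the model can tell where one ends and the next begins.
        sections.append(f"# File: {path.as_posix()}\n{text.rstrip()}\n")
    context = "\n".join(sections)
    if len(context) > max_chars:
        raise ValueError(
            f"context is {len(context)} chars, over the {max_chars}-char budget; "
            "pass fewer or smaller files"
        )
    return context
```

For example, `build_context(["models/volunteer.py", "models/event.py"])` (hypothetical paths) sends just the two models that interact. The budget check fails loudly instead of silently sending an oversized prompt.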

Leaky Pipes

Even if your model logic is correct, problems can arise from mismatched data or from how the data is processed. Check logs and tests before re-engineering your entire flow. It often helps to have Cursor insert diagnostic print statements to pinpoint the real culprit; frequently it’s not the code but the data feeding it.
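Here is the kind of diagnostic printing that can expose a data problem. It is a minimal sketch that assumes a hypothetical CSV roster export with a `first_name` column. Printing raw values with `repr()` makes stray spaces, blank cells, and mismatched header names visible, even when the model code itself is fine.

```python
import csv
import io


def load_first_names(csv_text: str, debug: bool = False) -> list[str]:
    """Parse a hypothetical roster export and return cleaned first names.

    With debug=True, print headers and each raw value via repr() so hidden
    problems (blank cells, stray spaces, unexpected header names) show up.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    if debug:
        print("DEBUG headers:", reader.fieldnames)
    names = []
    for row in reader:
        line_no = reader.line_num  # source line the row ended on
        raw = row.get("first_name")
        if debug:
            print(f"DEBUG line {line_no}: first_name={raw!r}")
        if raw is None or not raw.strip():
            # A data problem, not a model bug: report it rather than guess.
            print(f"WARNING line {line_no}: missing first_name")
            continue
        names.append(raw.strip())
    return names
```

With a header of `" first_name"` (note the leading space), every row warns about a missing name, which points straight at the export rather than at the Student model.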

A Root Cause Analysis Prompt

When Cursor truly struggles, I rely on a structured prompt with three steps:

Root Cause Analysis

Ask Cursor to list five or six potential underlying reasons for the failure.

Synthesize Findings

Have it converge on the most likely cause.

Propose Solutions

Request solutions with “minimal required changes” or, if you’re not ready to fix it yet, ask for diagnostic print statements.

This approach forces the AI to explain “why” before rushing into fixes. It’s especially handy when you see repetitive loops or misguided suggestions, which often signal that the AI is missing context or working with flawed data.
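You can also keep the three steps as a reusable template, so the wording stays consistent from session to session. This is a minimal sketch. The function name `root_cause_prompt`, its parameters, and the exact phrasing are hypothetical; the output is plain text you paste into the chat, not a Cursor API.

```python
def root_cause_prompt(problem: str, ready_to_fix: bool = True, n_causes: int = 5) -> str:
    """Assemble the three-step root cause analysis prompt as plain text."""
    if ready_to_fix:
        step3 = ("Propose a solution that makes only the minimal required "
                 "changes to address that cause.")
    else:
        # Not time to fix yet: ask for diagnostics instead of code changes.
        step3 = ("Do not fix anything yet. Instead, suggest diagnostic print "
                 "statements that would confirm or rule out that cause.")
    return "\n".join([
        f"Problem: {problem}",
        "",
        f"1. Root cause analysis: list {n_causes} potential underlying reasons for this failure.",
        "2. Synthesize findings: converge on the single most likely cause and explain why.",
        f"3. Propose solutions: {step3}",
    ])
```

Toggling `ready_to_fix` switches step three between a minimal fix and diagnostics, mirroring the two options described above.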

My Last Words

All this leads me back to that car ride, the glare, and the AI’s improbable last words. Yes, it gave me the solution, and it reminded me that a single new angle or prompt can sometimes salvage a session that seems doomed. But it also underscored how powerful, and potentially fickle, these models can be.

Some of you might still refuse to embrace AI, insisting on manual coding from start to finish. So, in light of everything, I have just one question: Any last words?